Date published

Nov 7, 2023

While machine learning has been around for a while (my first startup 14 years ago was based on ML & natural language processing), this wave of AI (transformers & generative AI) feels like a tsunami. It is the culmination of several waves crashing at the same time:

- Natural language as an interface (speech-to-text and text-to-speech)

- Generating human-like content & code (generative AI that learns from existing patterns)

- Reasoning capabilities (the ability to break a problem down into smaller ones)

- Hardware (GPUs)

Given how fast this space is moving, it is natural to feel daunted. If you are looking for a starter kit, here are my recommendations. Feel free to tweet me if I am missing something.

👴🏽 Messiah’s Corner

Marc provides an optimistic view, a must-read!

Marc goes toe to toe with Ben Thompson

No better thought leader than Elon, who takes a very long view

Folks who truly have their finger on the pulse of the ecosystem: Nat Friedman & Daniel Gross

💪🏼 The Enablers

The company that started this rush - OpenAI

The juggernaut that powers it all

Facebook learned from its mobile fiasco & enabled the open-source ecosystem

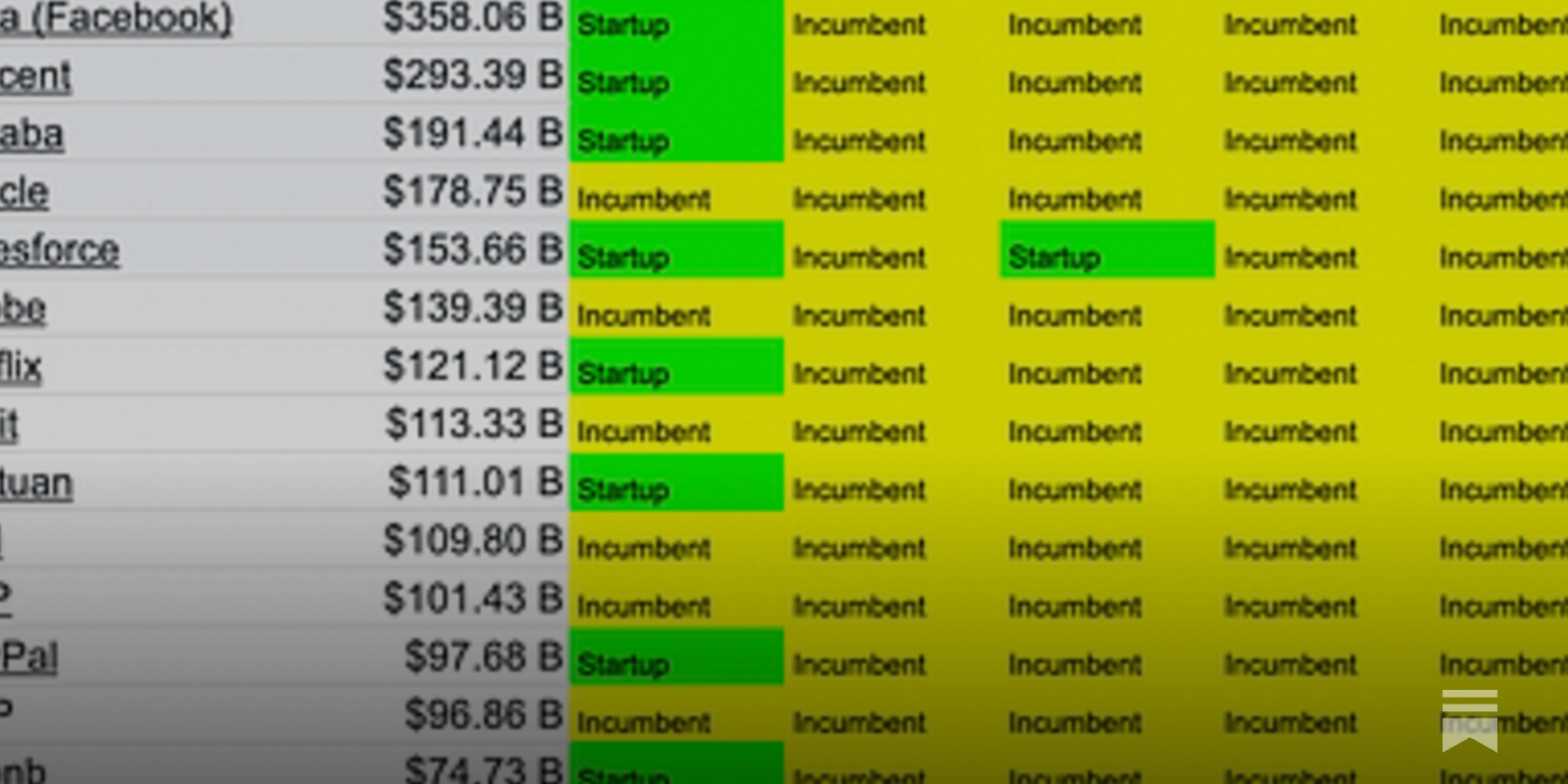

💰 Gambler’s Paradise

😬 101

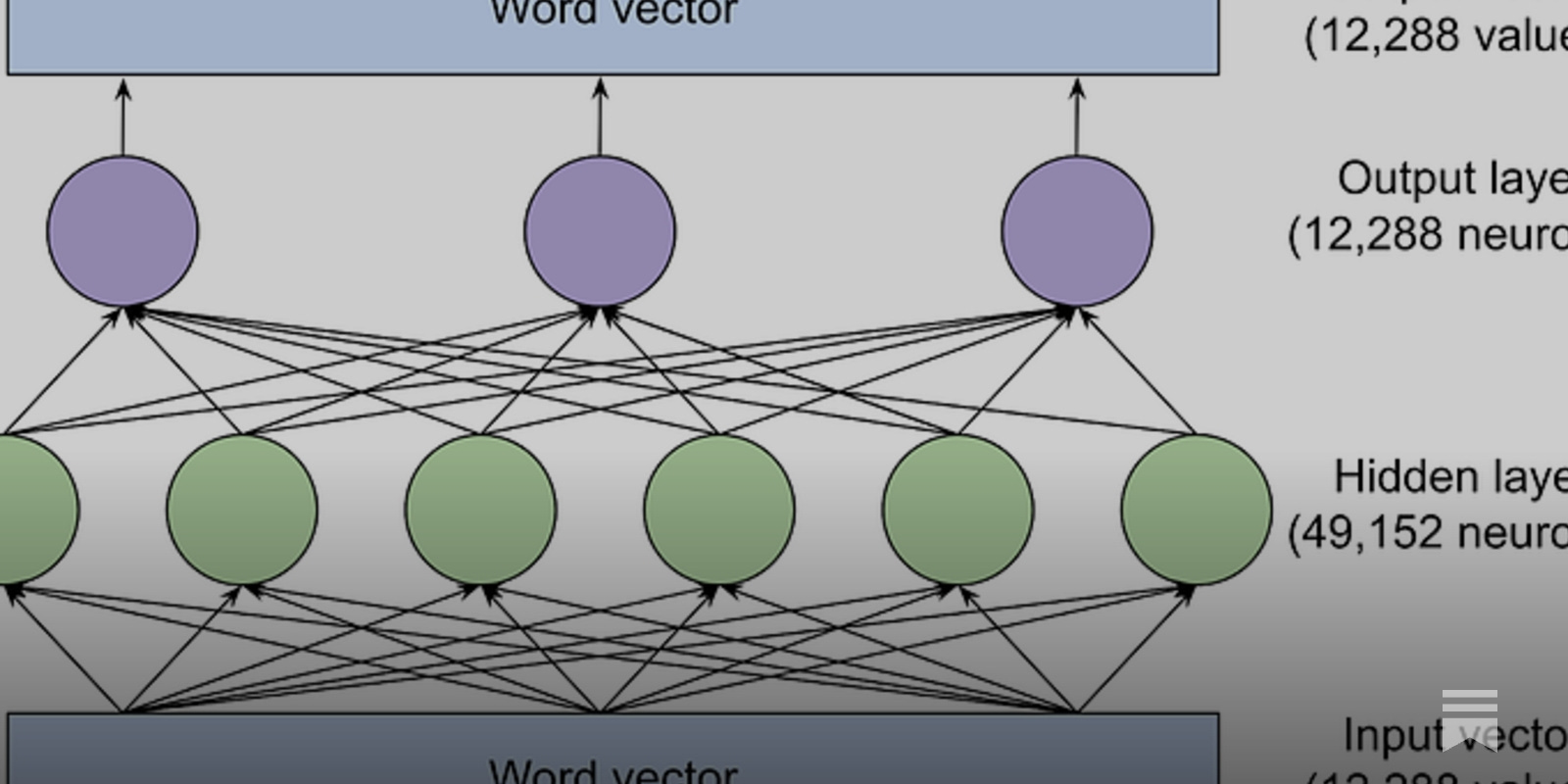

Intro to Large Language Models

Large Language Models (LLMs) explained

Reinforcement Learning from Human Feedback (RLHF) explained

Various terms explained

🏗️ Builder’s Toolkit

Sarah Guo lays out a strategy template

👷🏻‍♀️ Product Manager’s Corner

🤔 Things to ponder

Impact on energy consumption

Impact on water resources needed for cooling

Impact of Generative AI on education

Licensing issues around the data that powers LLMs

Reliance on international labor for human feedback in reinforcement learning

📚 Reading Corner

If you want to understand the foundations of ML from an economic perspective.

Historical context of ML & a compelling case for the opportunity

🌱 Staying up to date

This space moves at lightning pace; here is my Twitter list for keeping up to date

![[1hr Talk] Intro to Large Language Models](https://images.spr.so/cdn-cgi/imagedelivery/j42No7y-dcokJuNgXeA0ig/899493c1-c6ce-402d-a51c-5c17a8911361/maxresdefault/w=1920,quality=90,fit=scale-down)

/w=1920,quality=90,fit=scale-down)